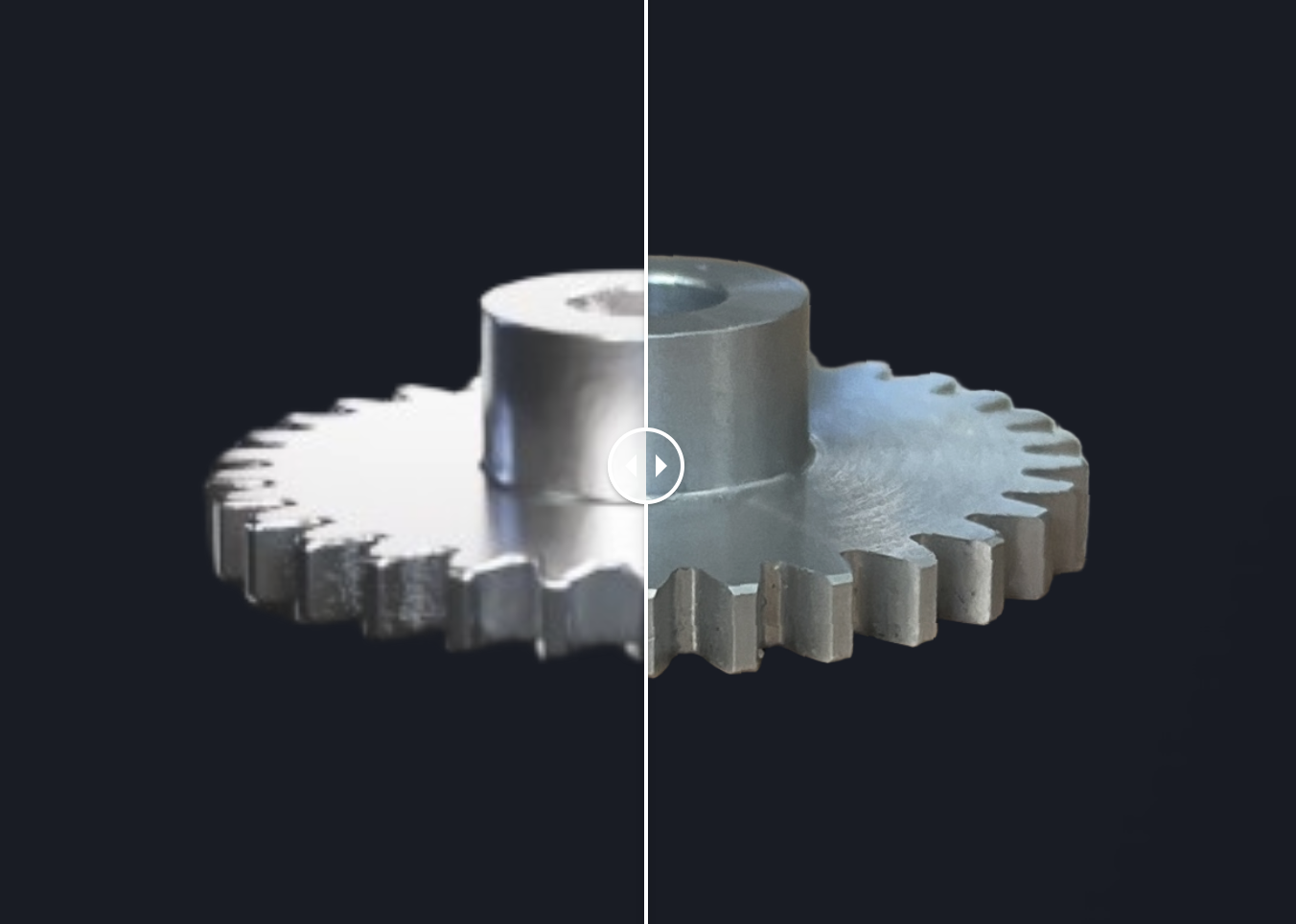

Synthetic data only works if it's close enough to the real thing. At Bucket, we generate thousands of photorealistic defect images from STEP files, but we need to measure how close those renders are to actual factory photos.

So we built a visual similarity evaluation pipeline. It combines semantic embeddings (CLIP), structural comparison (SSIM), and feature matching (ORB + RANSAC + logistic regression) to compare synthetic and real part images. Each metric has its strengths, but each also breaks down under real-world conditions. To address this, we developed a robust preprocessing pipeline.

CLIP: Model that encodes images (or text) into a vector embedding. The embeddings of two images can be compared using cosine similarity to determine the semantic similarity between them.
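As a minimal sketch of the comparison step, the snippet below computes cosine similarity between two embedding vectors. It does not load CLIP itself; the short vectors and the names `synthetic_embedding` and `real_embedding` are illustrative stand-ins for the embeddings a CLIP image encoder would return.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embeddings (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


# Toy 4-dimensional vectors (assumed values); real CLIP image embeddings
# have hundreds of dimensions.
synthetic_embedding = [0.2, 0.1, 0.9, 0.3]
real_embedding = [0.25, 0.05, 0.85, 0.35]
score = cosine_similarity(synthetic_embedding, real_embedding)
```

Because the score depends only on the angle between the vectors, it is unaffected by embedding magnitude, which is why cosine similarity is the usual choice for comparing CLIP outputs.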

SSIM: Measures visual similarity between two images by comparing luminance, contrast, and structure (texture/visual layout) at corresponding points throughout the images.
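To make the luminance, contrast, and structure terms concrete, here is a simplified sketch that evaluates the SSIM formula once over a whole grayscale patch. This is an assumption made for brevity: library implementations such as scikit-image's `structural_similarity` slide a local window across the image and average the results. The function name `global_ssim` is hypothetical, and the constants follow the conventional defaults of 0.01 and 0.03 times the dynamic range, squared.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float],
                dynamic_range: float = 255.0) -> float:
    """Single-window SSIM over two equally sized, flattened grayscale patches."""
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("patches must be non-empty and the same size")
    n = len(x)
    # Small stabilizing constants so flat patches do not divide by zero.
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    mu_x = sum(x) / n                                   # luminance
    mu_y = sum(y) / n
    var_x = sum((p - mu_x) ** 2 for p in x) / n         # contrast
    var_y = sum((q - mu_y) ** 2 for q in y) / n
    cov = sum((p - mu_x) * (q - mu_y) for p, q in zip(x, y)) / n  # structure
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


patch = [10, 50, 200, 120]
inverted = [255 - p for p in patch]
same = global_ssim(patch, patch)         # identical patches score 1.0
opposite = global_ssim(patch, inverted)  # inverted structure scores far lower
```

Note that the comparison happens pixel by pixel at matching positions, so any shift between the two images changes the covariance term even when the content is the same.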

ORB: Algorithm to extract key points and their descriptors from an image. The key points from two images can be matched, filtered, and compared.
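The matching step can be sketched without any imaging library, since ORB descriptors are binary strings (typically 32 bytes) compared by Hamming distance. The example below uses toy 2-byte descriptors and filters matches with a nearest-neighbor ratio test. That filter is swapped in here for illustration only; it is not the RANSAC step named earlier, and the function names and the 0.75 threshold are assumptions.

```python
from typing import List, Sequence, Tuple


def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def match_descriptors(query: Sequence[bytes], train: Sequence[bytes],
                      ratio: float = 0.75) -> List[Tuple[int, int, int]]:
    """Return (query_index, train_index, distance) for unambiguous matches."""
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if len(ranked) < 2:
            continue  # the ratio test needs two candidates
        (best_dist, best_idx), (second_dist, _) = ranked[0], ranked[1]
        # Keep the match only if it is clearly better than the runner-up.
        if best_dist < ratio * second_dist:
            matches.append((qi, best_idx, best_dist))
    return matches


# Toy descriptors (assumed values); a real extractor would produce these.
synthetic_descriptors = [b"\x0f\x0f", b"\xff\x00"]
real_descriptors = [b"\x0f\x0e", b"\xf0\xf0", b"\x00\xff"]
good_matches = match_descriptors(synthetic_descriptors, real_descriptors)
```

Here the first descriptor finds a clear partner one bit away, while the second is dropped because its two closest candidates are almost equally distant.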

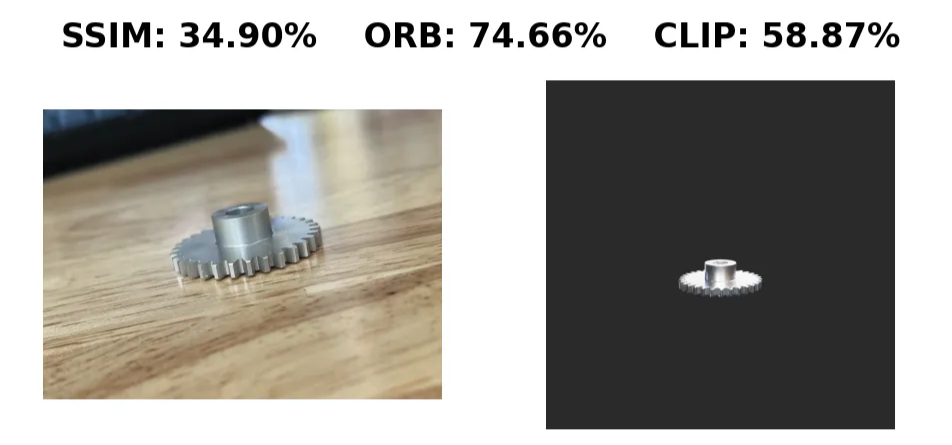

All three metrics fluctuate with factors that have nothing to do with the part itself.

They’re highly sensitive to background clutter. CLIP encodes the background into the semantic meaning of the image. SSIM compares backgrounds just like it compares the part. ORB picks up background features as if they were part of the object, throwing off matches.

Orientation and framing matter too. If the part is slightly rotated or off-center, CLIP will treat it as a different object. SSIM expects pixel-aligned layouts. ORB’s descriptors depend on the patch size and resolution, so changes in scale or placement skew the results.

But here's the thing: we're not building this for a lab. We can't tell people, "No, that's wrong. Move your part and retake the image." We're building tools for messy, real-world conditions, and our comparisons have to hold up anyway.

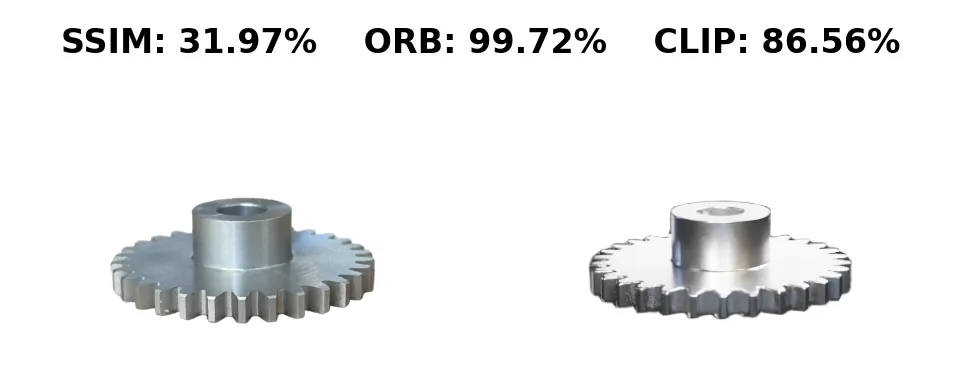

To do that, we needed a way to ignore everything except the part.

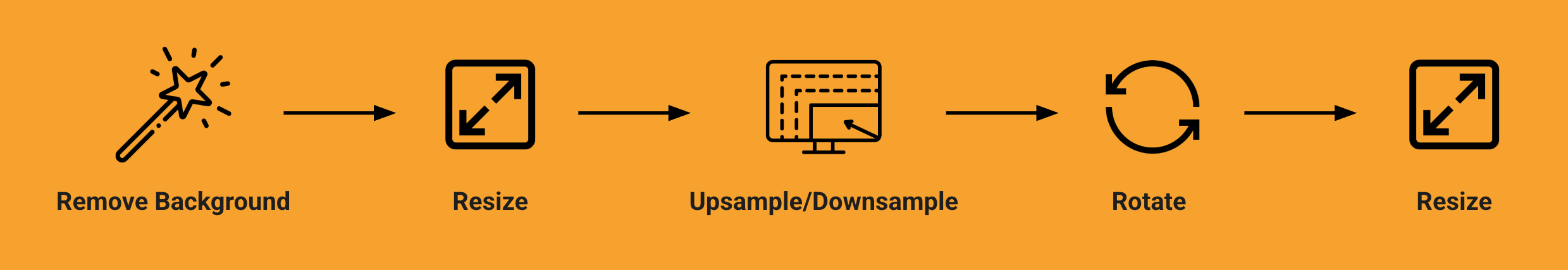

Our answer is a preprocessing pipeline that standardizes images before they are compared, so that differences beyond the part no longer affect the metrics.
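The individual steps are not spelled out here, so the following is only a hypothetical sketch of what one standardization step could look like, not the actual pipeline. It assumes a foreground mask for the part is already available (for example, from a segmentation model), blanks out the background, crops to the part's bounding box, and centers the result on a square canvas. The name `isolate_and_center` and the list-of-rows image format are illustrative choices.

```python
from typing import List

Image = List[List[int]]   # grayscale image as rows of pixel values
Mask = List[List[bool]]   # True where the pixel belongs to the part


def isolate_and_center(image: Image, mask: Mask, background: int = 0) -> Image:
    """Blank the background, crop to the part, and center it on a square canvas."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows or not cols:
        raise ValueError("mask contains no foreground pixels")
    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]
    height, width = bottom - top + 1, right - left + 1
    side = max(height, width)
    canvas = [[background] * side for _ in range(side)]
    off_r, off_c = (side - height) // 2, (side - width) // 2
    for r in range(top, bottom + 1):
        for c in range(left, right + 1):
            if mask[r][c]:  # copy part pixels only; clutter stays blank
                canvas[r - top + off_r][c - left + off_c] = image[r][c]
    return canvas


# A 1x3 "part" (values 5, 6, 7) surrounded by clutter (value 9).
cluttered = [
    [9, 9, 9, 9, 9],
    [9, 5, 6, 7, 9],
    [9, 9, 9, 9, 9],
    [9, 9, 9, 9, 9],
]
part_mask = [[value != 9 for value in row] for row in cluttered]
standardized = isolate_and_center(cluttered, part_mask)
```

In this toy case the output is the same wherever the part sits in the frame and whatever surrounds it. A fuller version of the sketch would presumably also resize the canvas to a fixed resolution and normalize orientation.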

With this preprocessing pipeline, our metrics now reliably measure the similarity between parts across different image conditions. Higher-quality benchmarking makes it easier to iterate on rendering quality and lays the foundation for a tool simple enough for customers to use independently.